[Paper Review] NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

Input Data

transforms_train.json

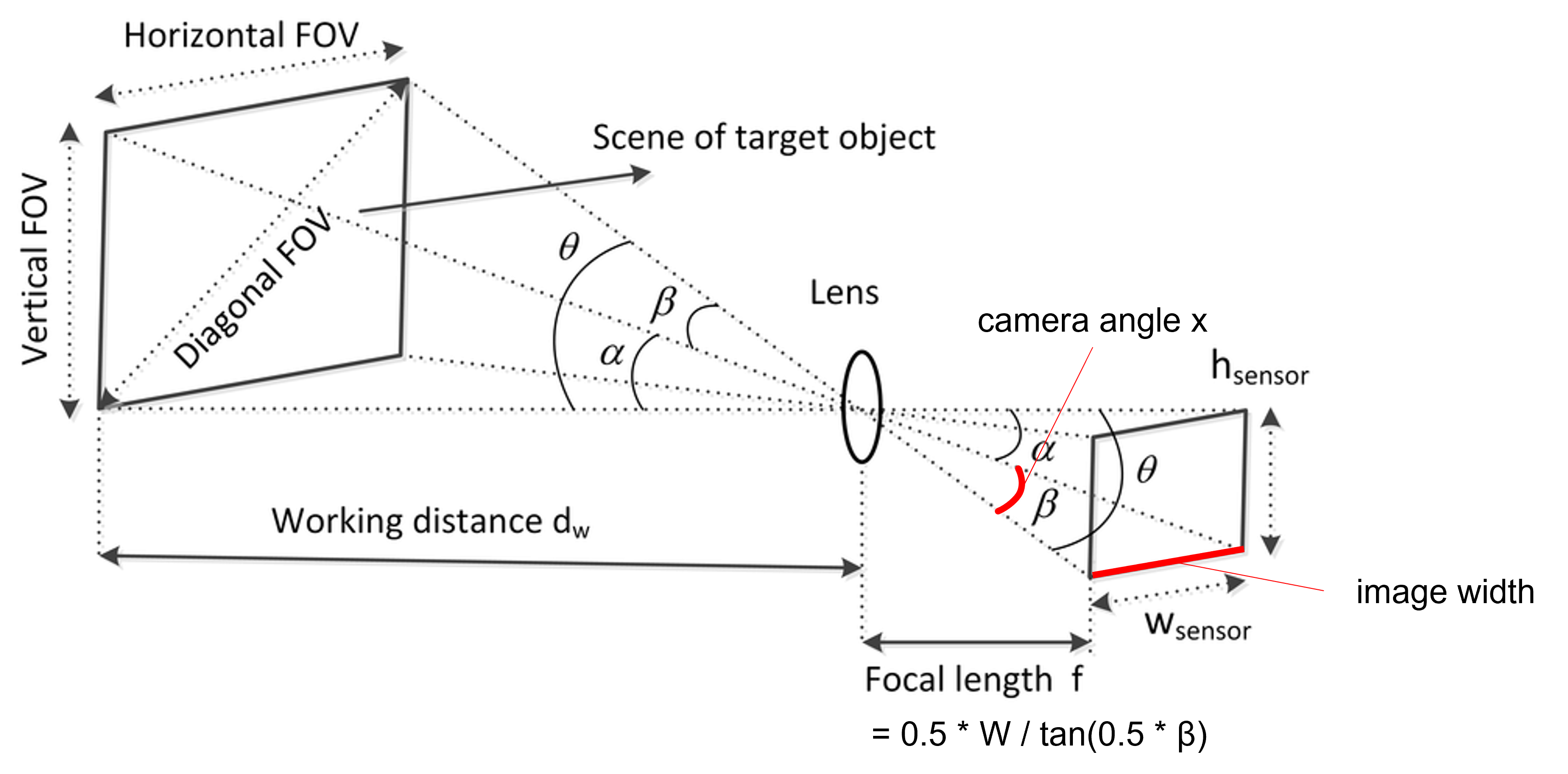

camera_angle_x : The camera's field of view (FOV) along the x axis, i.e. the horizontal FOV

frames : List of dictionaries, one per image, each containing that image's camera transform matrix (the camera-to-world matrix)

Computing the focal length using trigonometry

focalLength = get_focal_from_fov(fieldOfView=traindata["camera_angle_x"], width=config.IMAGE_WIDTH)

tf.tan : Computes the tangent of every element in a tensor
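
The body of get_focal_from_fov is not shown in this post. A minimal sketch of the trigonometry, assuming a pinhole camera and a field of view given in radians: half the image width and the focal length form a right triangle whose angle at the camera is half the FOV, so focal = (width / 2) / tan(FOV / 2). The name focal_from_fov is hypothetical, and math.tan stands in for tf.tan.

```python
import math


def focal_from_fov(field_of_view: float, width: int) -> float:
    """Focal length in pixels for a pinhole camera (hypothetical helper).

    field_of_view is the horizontal FOV, assumed to be in radians.
    width is the image width in pixels.
    """
    return 0.5 * width / math.tan(0.5 * field_of_view)


# A 90-degree FOV over a 100-pixel-wide image gives roughly 50 pixels.
focal = focal_from_fov(math.pi / 2, 100)
```

A narrower FOV gives a longer focal length for the same image width, which is why the two values can be converted into each other once the width is fixed.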

Getting the image paths and camera-to-world transform matrices

trainImagePaths, trainC2Ws = get_image_c2w(jsonData=traindata, datasetPath=config.DATASET_PATH)
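
get_image_c2w is not listed in this post. As a rough sketch of what such a helper could look like, the version below assumes that each entry of frames carries a "file_path" and a "transform_matrix" key (key names as found in the public NeRF synthetic dataset, not stated in the text above) and that images are stored with a ".png" extension, which is also an assumption. The function name is hypothetical.

```python
import os


def collect_paths_and_poses(json_data, dataset_path, extension=".png"):
    """Return (image_paths, c2w_matrices) from a parsed transforms JSON.

    Hypothetical stand-in for get_image_c2w. The "file_path" and
    "transform_matrix" keys and the default extension are assumptions.
    """
    image_paths, c2ws = [], []
    for frame in json_data["frames"]:
        # file_path is assumed to be relative, e.g. "./train/r_0"
        relative = os.path.normpath(frame["file_path"])
        image_paths.append(os.path.join(dataset_path, relative + extension))
        # 4x4 camera-to-world matrix stored as nested lists
        c2ws.append(frame["transform_matrix"])
    return image_paths, c2ws
```

Both lists keep the order of frames, so the i-th path and the i-th matrix describe the same image.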

Instantiating an object of the class used to load images from disk

getImages = GetImages(imageHeight=config.IMAGE_HEIGHT, imageWidth=config.IMAGE_WIDTH)

trainImageDs = (tf.data.Dataset.from_tensor_slices(trainImagePaths).map(getImages, num_parallel_calls=config.AUTO))

tf.io.decode_jpeg : Decodes a JPEG-encoded image into a uint8 tensor

tf.data.Dataset.from_tensor_slices : Creates a tf.data.Dataset whose elements are slices of the given tensors

Instantiating the ray object

getRays = GetRays(focalLength=focalLength, imageWidth=config.IMAGE_WIDTH, imageHeight=config.IMAGE_HEIGHT, NEAR_BOUNDS=config.NEAR_BOUNDS, FAR_BOUNDS=config.FAR_BOUNDS, nC=config.NUM_COARSE)

trainRayDs = (tf.data.Dataset.from_tensor_slices(trainC2Ws).map(getRays, num_parallel_calls=config.AUTO))

https://keras.io/examples/vision/nerf/

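- tf.reduce_sum : Computes the sum of elements across the given dimensions of a tensor, removing the reduced dimensions

- tf.norm : Computes the norm of vectors, matrices, and tensors

- tf.linspace : Generates evenly spaced values along a given axis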
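
The GetRays class is not shown in this post. The sketch below illustrates, for a single pixel and in plain Python, the three steps the functions above are typically used for: rotating the camera-space direction into world space (a multiply followed by a sum, as with tf.reduce_sum), normalizing it (tf.norm), and placing evenly spaced sample depths between the near and far bounds (tf.linspace). The function names, the camera convention (looking down the negative z axis with y pointing up), and the nested-list layout of c2w are all assumptions.

```python
import math


def pixel_ray(x, y, width, height, focal, c2w):
    """Origin and unit direction of the ray through pixel (x, y)."""
    # Direction in the camera frame (assumed: -z forward, +y up).
    d_cam = ((x - width * 0.5) / focal, -(y - height * 0.5) / focal, -1.0)
    # Rotate into the world frame with the upper-left 3x3 block of c2w.
    d_world = [sum(c2w[r][c] * d_cam[c] for c in range(3)) for r in range(3)]
    # Normalize to unit length.
    norm = math.sqrt(sum(v * v for v in d_world))
    direction = [v / norm for v in d_world]
    # The camera position is the translation column of c2w.
    origin = [c2w[r][3] for r in range(3)]
    return origin, direction


def linspace(start, stop, num):
    """Evenly spaced values from start to stop, both included."""
    if num == 1:
        return [start]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


identity = [[1.0, 0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]
origin, direction = pixel_ray(50, 50, 100, 100, 50.0, identity)
t_vals = linspace(2.0, 6.0, 5)  # sample depths between near and far
```

A sample point on the ray is then origin + t * direction for each t in t_vals.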

Zipping the image and ray datasets together: building the data input pipeline for the train, val, and test datasets

traindata = tf.data.Dataset.zip((trainRayDs, trainImageDs))

Set Model

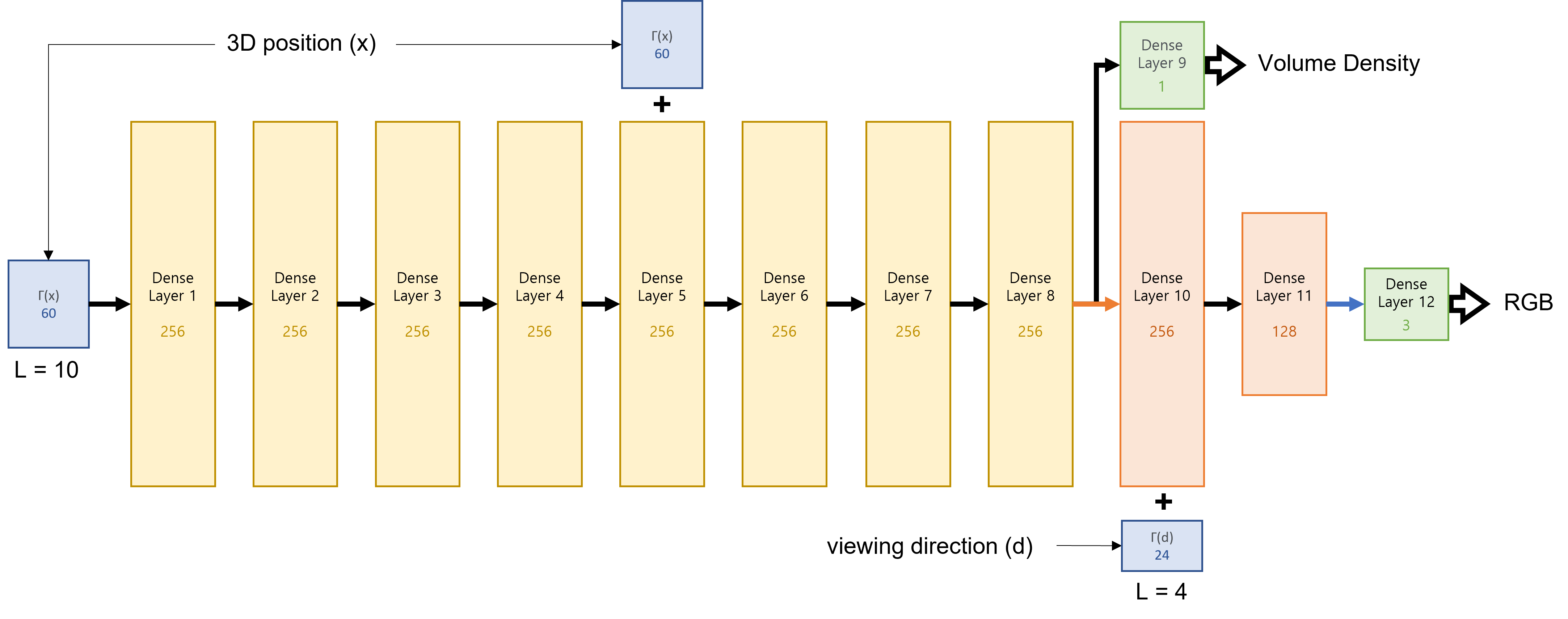

Instantiation of the Coarse Model & Fine Model

coarseModel = get_model(lxyz=config.DIMS_XYZ, lDir=config.DIMS_DIR, batchSize=config.BATCH_SIZE, denseUnits=config.UNITS, skipLayer=config.SKIP_LAYER)

fineModel = get_model(lxyz=config.DIMS_XYZ, lDir=config.DIMS_DIR, batchSize=config.BATCH_SIZE, denseUnits=config.UNITS, skipLayer=config.SKIP_LAYER)
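
The lxyz and lDir arguments presumably set how many frequency bands are used when positions and viewing directions are positionally encoded before entering the network; encoder_fn is not listed in this post, so the following is a sketch with assumed names. Each coordinate p is expanded into sin(2^i p) and cos(2^i p) for i = 0, ..., L-1. Keeping the raw input as the first entries and omitting the factor of π that appears in the paper's formula are simplifying assumptions.

```python
import math


def positional_encoding(p, num_bands):
    """Expand each coordinate of p into sines and cosines of growing frequency.

    Hypothetical stand-in for encoder_fn, operating on a plain list of floats.
    """
    encoded = list(p)  # raw coordinates first (assumption)
    for i in range(num_bands):
        freq = 2.0 ** i
        encoded.extend(math.sin(freq * v) for v in p)
        encoded.extend(math.cos(freq * v) for v in p)
    return encoded


# A 3D position with 10 bands becomes 3 * (2 * 10 + 1) = 63 values.
features = positional_encoding([0.1, 0.2, 0.3], 10)
```

The higher-frequency terms let a small MLP represent fine spatial detail that it would otherwise smooth out.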

NeRF trainer model

nerfModel = Nerf_Trainer(coarseModel=coarseModel, fineModel=fineModel, lxyz=config.DIMS_XYZ, lDir=config.DIMS_DIR, encoderFn=encoder_fn, renderImageDepth=render_image_depth, samplePdf=sample_pdf, nF=config.NUM_FINE)
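
render_image_depth is passed to the trainer without being shown. Assuming it follows the volume rendering quadrature from the NeRF paper, a single-ray version can be sketched as follows: each sample gets an opacity alpha = 1 - exp(-sigma * delta), its weight is that opacity times the transmittance accumulated in front of it, and the pixel color and depth are weighted sums. The function name, argument layout, and the large value used for the last interval are assumptions.

```python
import math


def render_ray(rgb, sigma, t_vals):
    """Composite per-sample colors and densities along one ray.

    rgb: list of (r, g, b) per sample, sigma: list of densities,
    t_vals: list of sample depths in increasing order.
    """
    # Distance to the next sample; the last interval is treated as very large.
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(len(t_vals) - 1)]
    deltas.append(1e10)

    transmittance = 1.0  # fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    depth = 0.0
    weights = []
    for c, s, d, t in zip(rgb, sigma, deltas, t_vals):
        alpha = 1.0 - math.exp(-s * d)
        w = transmittance * alpha
        weights.append(w)
        color = [acc + w * ch for acc, ch in zip(color, c)]
        depth += w * t
        transmittance *= 1.0 - alpha
    return color, depth, weights


# An empty first sample followed by an opaque green one.
color, depth, weights = render_ray(
    rgb=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    sigma=[0.0, 5.0],
    t_vals=[2.0, 4.0],
)
```

The returned weights are also what a hierarchical sampler such as sample_pdf would typically use to decide where the fine model should place additional samples.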

Train Model

Compiling the model (optimizer: Adam, loss function: mean squared error)

nerfModel.compile(optimizerCoarse=Adam(), optimizerFine=Adam(), lossFn=MeanSquaredError())
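
The loss compares rendered pixel colors with the ground-truth image. As a plain-Python illustration of what mean squared error computes over RGB values (a stand-in for Keras's MeanSquaredError, with a hypothetical function name):

```python
def mean_squared_error(predicted, target):
    """Average squared difference over all channels of all pixels."""
    total, count = 0.0, 0
    for pred_px, true_px in zip(predicted, target):
        for p, t in zip(pred_px, true_px):
            total += (p - t) ** 2
            count += 1
    return total / count


# One pixel that is pure red where the target is black.
loss = mean_squared_error([[1.0, 0.0, 0.0]], [[0.0, 0.0, 0.0]])
```

Since the coarse and fine models each render their own image, a loss of this form would be evaluated once per model, each with its own optimizer as in the compile call.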

Creating the monitor callback that tracks training and evaluation results

trainMonitorCallback = get_train_monitor(testDs=testdata, encoderFn=encoder_fn, lxyz=config.DIMS_XYZ, lDir=config.DIMS_DIR, imagePath=config.IMAGE_PATH)

NeRF model training

nerfModel.fit(traindata, steps_per_epoch=config.STEPS_PER_EPOCH, validation_data=valdata, validation_steps=config.VALIDATION_STEPS, epochs=config.EPOCHS, callbacks=[trainMonitorCallback])

Saving the model parameters

nerfModel.coarseModel.save(config.COARSE_PATH)

nerfModel.fineModel.save(config.FINE_PATH)

References

- https://keras.io/examples/vision/nerf/